Have you ever been turned down for a loan, served a “recommended” news story, or stared at a streaming suggestion and wondered why it happened? Was it luck, or something deeper? More often than not, there’s an invisible decision-maker behind these mysteries: an AI algorithm. In this article, let’s lift the curtain on what goes on inside these “black boxes” and explore why AI algorithmic transparency matters so much in our everyday digital lives.

1. Why Should We Care About AI Algorithmic Transparency?

Consider the 2019 controversy over Apple Card credit limits. Customers reported that Apple’s credit card, backed by Goldman Sachs, offered men higher credit limits than women with similar financial profiles.

Why It Failed: An opaque algorithm meant no one could clearly explain to customers why their limits differed, so the outcomes were widely seen as discriminatory.

Lesson: Fintech AI must prioritize fairness, transparency, and explainability in decision-making.

This real-world example illustrates the power—and the danger—of modern AI systems. When algorithms make decisions that affect our jobs, health, finances, or even the ads we see online, we have a right to know how and why these decisions are made. This is what AI algorithmic transparency is all about: making sure the logic, data, and reasoning behind AI-powered decisions can be understood and fairly challenged.

2. What is AI Algorithmic Transparency?

AI algorithmic transparency means being open and honest about:

- How an AI system works,

- What data it uses,

- The steps it takes to reach conclusions,

and explaining all of this in language everyone can understand.

As explained in a recent walk-through by TechTarget, transparency isn’t just about releasing source code. It means helping ordinary people, like you and me, understand the basics of how a decision was made, so we’re not left in the dark about choices that affect our lives.

3. The Black Box Problem: Why Algorithms Are So Mysterious

Most modern AI, especially deep learning, works like a “black box.” This means the process from input to output is so complex that even the creators can’t always pinpoint exactly why a decision was made. For example, a spam filter learns millions of subtle patterns in emails to decide what’s “junk,” but can’t always explain why a specific message gets flagged.

As a recent Coursera article puts it: “As AI models become more sophisticated, their decisions often appear mysterious, opaque, or even magical—making it hard for outsiders to hold them accountable.”

The “Recipe” Example

Imagine baking a cake from a mystery recipe. If the cake comes out wrong and you don’t know what went into it or what steps were taken, how could you fix it, or ever trust the process again? That is what the black box problem feels like in AI.

4. Why AI Algorithmic Transparency Matters for EVERYONE

You don’t need a technical degree to be affected by algorithmic decisions—or to demand AI algorithmic transparency. Let’s see how it touches everyday life:

- Job Applications: Ever sent a resume and never heard back? Many companies use AI to filter candidates. If you’re rejected, you deserve to know why.

- Healthcare: Some hospitals use AI to suggest diagnoses. If a test is skipped or a treatment is missed, knowing the “why” could be life-saving.

- Loans and Credit: As in the Apple Card example, when AI decides your financial future, transparency is crucial so mistakes can be caught and corrected.

5. Explainability vs. Transparency: What’s the Difference?

Here’s where I’d like to get a little technical—but keep it simple!

- Transparency asks: “Can we open the lid and see how the algorithm works?”

- Explainability asks: “Can we explain, in plain language, each outcome or decision made?”

An article from SAP puts it this way: Even if the math is complex, companies are responsible for making sure results can be clearly explained—even to a layperson. This helps build trust, allows for appeals, and keeps harmful mistakes in check.
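For readers curious what “explainable” can look like in practice, here is a minimal Python sketch. It assumes a deliberately simple, hypothetical scoring rule: a weighted sum of three made-up applicant features compared against a made-up approval threshold. None of these names or numbers come from any real lender, and real credit models are far more complex. The point is that when each factor’s contribution to the score is known, the system can turn a bare “no” into a plain-language reason.

```python
from dataclasses import dataclass

# Hypothetical weights for a toy, fully transparent scoring rule.
# These numbers are invented for illustration only.
WEIGHTS = {
    "income_thousands": 0.5,   # points per $1,000 of annual income
    "years_employed": 2.0,     # points per year at current job
    "missed_payments": -15.0,  # points lost per missed payment
}
THRESHOLD = 40.0  # hypothetical minimum score for approval


@dataclass
class Decision:
    approved: bool
    score: float
    reasons: list[str]


def decide(applicant: dict[str, float]) -> Decision:
    """Score an applicant and explain which factors pushed the score down."""
    # Each feature's contribution is simply weight * value.
    contributions = {
        name: weight * applicant[name] for name, weight in WEIGHTS.items()
    }
    score = sum(contributions.values())
    # Collect factors that lowered the score, largest penalty first.
    negatives = sorted(
        (c, name) for name, c in contributions.items() if c < 0
    )
    reasons = [
        f"{name} lowered your score by {-c:.1f} points" for c, name in negatives
    ]
    return Decision(approved=score >= THRESHOLD, score=score, reasons=reasons)
```

Running `decide({"income_thousands": 60, "years_employed": 3, "missed_payments": 2})` gives a score of 6.0, below the threshold of 40, along with the reason “missed_payments lowered your score by 30.0 points.” Deep learning models don’t break down this neatly, which is exactly why explaining them takes extra effort.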

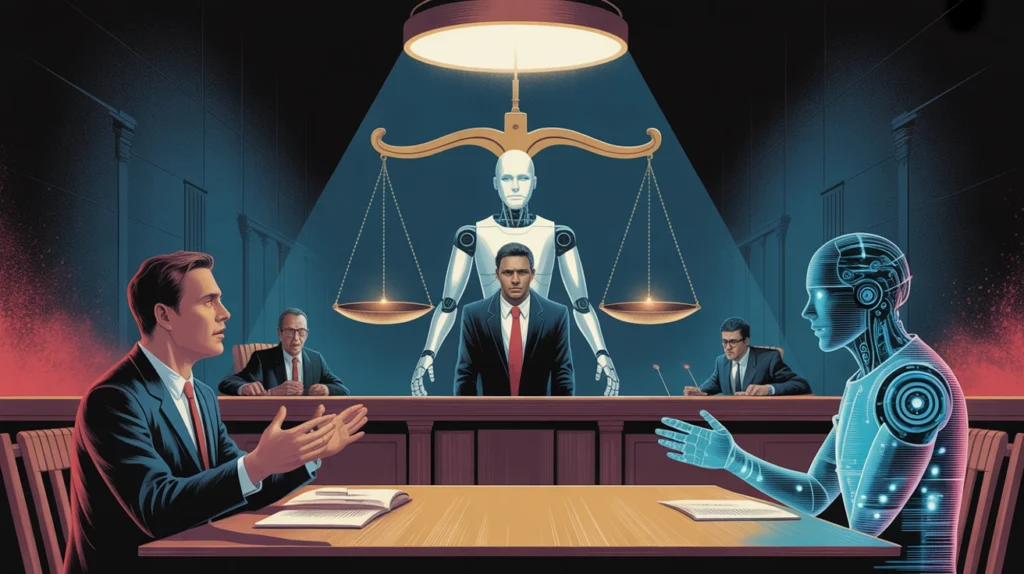

6. Laws and Rights: Your Right to an Answer

Did you know that in places like Europe, laws such as the GDPR give you rights when a computer makes decisions about you with significant consequences, often described as a “right to explanation”? According to IBM and ISO guidelines, companies are expected to:

- Provide clear summaries of how algorithms work,

- Disclose what data is used,

- And explain decisions that affect individuals.

7. Real-World Examples: When the Black Box Goes Wrong

AI algorithmic transparency isn’t just a nice-to-have. Lack of it has already led to real harm:

- Hiring Discrimination: I read about a large company’s recruiting AI that repeatedly favored candidates of a certain gender. No one caught the bias for years because no one checked how decisions were being made.

- Criminal Justice Algorithms: Some courts use software to predict a defendant’s risk of reoffending. Investigations have found that these scores can be unfairly biased, yet often neither the judge nor the defendant fully understands how a score was determined.

- Healthcare Denials: In one notable report, insurance companies used AI to fast-track claim reviews, sometimes denying care for reasons neither doctors nor patients could grasp.

8. Building Trust: How Can We Make Algorithms More Transparent?

According to a recent WRITER beginner’s guide and other industry guidelines, building real AI algorithmic transparency involves:

- Transparency statements: Encouraging companies to publish “algorithmic transparency statements” (clear, readable summaries of how their AI works),

- Independent audits: Letting neutral third parties inspect AI systems for fairness, accuracy, and bias,

- Open-source models: When possible, making code public so outside experts can review and improve it,

- Human review panels: Where AI can affect real lives, humans should be able to double-check critical decisions.
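To give a feel for what an independent audit might check, here is a minimal Python sketch of one common test: comparing approval rates across groups. The decision data and group labels are hypothetical. The 0.8 cut-off echoes the “four-fifths rule” used as a rule of thumb in US employment guidelines; a real audit would look at much more, including error rates, data quality, and how the model was built.

```python
from collections import defaultdict
from collections.abc import Iterable


def approval_rates(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Compute the share of approved decisions for each group."""
    totals: dict[str, int] = defaultdict(int)
    approvals: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group approval rate divided by the highest (1.0 means equal).

    Assumes at least one group has a non-zero approval rate.
    """
    return min(rates.values()) / max(rates.values())


# Hypothetical audit data: (group label, was the applicant approved?)
decisions = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

rates = approval_rates(decisions)
ratio = impact_ratio(rates)
print(rates)
print(f"impact ratio: {ratio:.3f}")
if ratio < 0.8:  # hypothetical cut-off inspired by the four-fifths rule
    print("Warning: approval rates differ enough to warrant a closer look.")
```

Here group_b is approved half the time versus 80% for group_a, giving a ratio of 0.625, which would flag the system for closer review. A low ratio doesn’t prove discrimination on its own; it tells auditors where to dig.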

9. Who’s Responsible? Companies, Coders, or Us?

Many experts now believe that responsibility for AI transparency is shared. As the ISO report states, “Creators must ensure transparency is built-in, organizations must communicate openly, and users must demand clarity and fairness”.

For a non-technical reader, this means: You are absolutely allowed to ask questions about algorithmic decisions, expect clear answers, and hold companies to account when technology fails or behaves unfairly.

10. Everyday Tips: How to Protect Yourself and Demand AI Algorithmic Transparency

- Ask for explanations: If a computer denies you a loan, job, or service, politely request a clear reason in simple language.

- Check privacy policies: Good companies will state when and how they use AI.

- Support “right to explanation” laws: These give consumers a legal basis for demanding answers.

- Spread awareness: Talk with friends and family—especially those less digitally savvy—about the importance of understanding algorithmic decisions.

11. Conclusion: Demanding a Fairer, More Open Digital World

As someone who’s spent time reading articles and reports across the internet, I can tell you: Algorithmic transparency isn’t just for tech experts. It’s a basic right in a world where AI shapes more decisions than ever before.

We all benefit when “the secret life of algorithms” is brought into the light. Fairness, explainability, and accountability are the foundations of AI Ethics—and transparency is the window that lets us check what’s happening inside. Remember, you don’t have to settle for “computer says no.” In fact, demanding explanations and openness will make AI better for everyone.

So next time you bump into an automated decision that affects your life, don’t be afraid to ask: How did this happen? Who designed this? On what data? That’s AI algorithmic transparency in action—and it’s how we all can help shape a fairer digital future.
